Make Web Development Fun Again

How we accumulated so much cruft, and how we can streamline our workflow for cleaner, more efficient results

by Joe Honton

If you're a frontend web developer, you've probably retooled more than once in the past year. New advances in HTML, CSS and JavaScript mean new opportunities to improve upon old ways of doing things. This is good. We welcome these changes.

But these changes require us to rethink how we go about our work. Lessons that we learned the hard way, through trial and error, have to be relearned all over again.

Meanwhile, open source projects and tool vendors continuously release new versions of their software. The entire ecosystem frantically works to keep pace with advances in the underlying technology. So for those of us slinging code, keeping up-to-date with the frontend stack and its workflow tools is more than a part-time effort.

But wait. Remember when it wasn't so complicated? When there weren't preprocessors, transpilers, and bundlers to convert our fancy new code into lowest common denominator output. When we didn't need task runners and a pipelined workflow to stay sane. When we didn't have to generate sourcemaps just to use a debugger without mangled code. When the framework we use didn't mix everything together in polyglot fashion. Back when things were just simpler.

Remember when it was all so much fun?

Recently I've been having a lot of fun with web development. That's because I've learned to simplify my workflow.

It's all about cutting out the cruft. Now I spend less time on overhead and more time being creative. Allow me to share how I got to this point.

The Golden Era

We all know that a lot has happened in a short space of time. So the history of how we got to where we are today is fresh in our collective memories. Still, it's central to my thesis about simplification and cutting out the cruft. So a backward glance will help to tell the story.

It all started with HTML — no CSS, no JavaScript — just a short list of tags for structuring layout, emphasizing words, and hyperlinking between docs.

Then, CSS and JavaScript arrived on the scene in quick succession. They provided us with ways to work on distinct parts of the problem. Separation of concerns became our manifesto:

- HTML for semantics and content

- CSS for layout and decorations

- JavaScript for dynamic interaction

But it wasn't a perfect world. We had to wrestle with browser incompatibilities. For a while we ignored the differences and just pasted "Best viewed with Internet Explorer" at the bottom of each page, letting visitors fend for themselves.

Today, these three technologies form the basis for everything we do, and every frontend developer must master them. But we learned that sometimes vendors fall behind in spec-compliance, and a little help is needed.

The Library Era

Things started to get messy when we began using JavaScript in earnest. Browser incompatibilities caused us no end of trouble. We stumbled through the learning curve of asynchronous versus synchronous while teaching ourselves how to make AJAX calls. And we had to do all of this with nothing more than document.getElementById(). Then we discovered jQuery and never looked back.

When jQuery arrived it saved us from the browser incompatibility wars. It gave us a unified interface that papered over the major browser differences. For the first time, we could deploy our code and reasonably expect that visitors would enjoy the experience we designed for them.

Today, we shudder at the thought of using jQuery, but at that time it was de rigueur. It's important to note that jQuery's rise wasn't a foregone conclusion. It got to that hall-of-fame status only after stiff competition from open source libraries like MooTools, Dojo, and YUI.

Those three libraries each packaged up a mixture of general purpose helper functions and user interface design decisions: a hodge-podge of everything you needed in an all-in-one library. In contrast, jQuery stayed focused.

jQuery started out with one spectacular feature: extending the very limited capability provided by document.getElementById(). Then, it leveraged that into a set of wrapper functions that smoothed out browser DOM inconsistencies. To top it off, it made XMLHttpRequest easy to use, exposing countless developers to the new world of asynchronous programming.

Suddenly, simple onclick callbacks were no longer good enough. Interactivity became the hot new thing. Users flocked to websites that gave them dynamic content. So the nascent <noscript> movement failed to gain traction, and the catchphrases unobtrusive JavaScript and graceful degradation soon fell out of favor. Working with HTML's DOM was no longer optional. It became an ever more important part of our work.

jQuery is considered legacy these days, for a simple reason: its original "spectacular feature" has been incorporated directly into the DOM in the form of document.querySelector(). Furthermore, its second most popular feature, the $.ajax() function, has been superseded by the standards-based Fetch API. Most of what could be done in jQuery can now be done in a similar number of lines of plain JavaScript.
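To make that comparison concrete, here is a minimal sketch of the two jQuery staples rewritten with standard APIs. The function names, the selector, and the injectable fetchFn parameter are assumptions made for illustration (the parameter exists only so the sketch can be tried without a network); only querySelectorAll(), classList, and fetch() are the real platform pieces.

```javascript
// jQuery equivalent: $(selector, root).addClass('active')
// "root" is any element or the document itself.
function activateItems(root, selector) {
  const matches = root.querySelectorAll(selector);
  for (const el of matches) {
    el.classList.add('active');
  }
  return matches.length;
}

// jQuery equivalent: $.ajax({ url, dataType: 'json' })
// fetchFn defaults to the global fetch; it is a parameter only for illustration.
async function getJson(url, fetchFn = globalThis.fetch) {
  const response = await fetchFn(url, { headers: { Accept: 'application/json' } });
  // Unlike $.ajax, fetch does not reject on HTTP error statuses, so check explicitly.
  if (!response.ok) {
    throw new Error(`Request failed: ${response.status}`);
  }
  return response.json();
}
```

In a page, a call might look like activateItems(document, '#menu .item') followed by await getJson('/api/items'), where both the selector and the URL are placeholders.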

Today we no longer need jQuery, and it shouldn't be considered for any new projects. Still, out of this experience, we learned to accept third party libraries as a necessary part of our work.

The Preprocessor Era

At the same time that HTML and the DOM were maturing, our clients' desire for sophisticated styling grew. And for a while, much of what we needed to satisfy that desire was just a feeling of interactivity. Adobe Flash helped us fill that need by giving us a way to add sparkle to a client's homepage.

But soon CSS standardized two of the best Flash features: transitions and animations. Then with the new <canvas>, <audio>, and <video> tags in HTML5, the rest of the proprietary Flash feature-set seemed superfluous, and its insecure core forced its demise.

CSS became our best friend as we played with gradient backgrounds, rounded corners, and drop shadows. We began to write more and more CSS — for layout, for decorations, for typography — until it got out of hand and we needed help.

Over time we discovered the need for variable declarations in our CSS. We needed, for example, a way to adjust the colors and dimensions of our themes in one place and have those adjustments reflected across all of our stylesheets. So we began using preprocessors like Less and Sass which made this possible.

As our stylesheets became ever more complex we learned to organize our rules into self-contained files to isolate context and reduce naming conflicts. We began relying on preprocessor @import statements to bring them all together. And as we did this, we learned to accept preprocessors as a necessary part of our work.

This seemingly innocuous preprocessing step started us down the path we've been on ever since. No longer could we simply point our browser at a URL and hit the refresh button. Now, for the first time, we had to build our website.

But the W3C standard has evolved and has now overtaken much of what Less and Sass provide:

- CSS allows us to declare and use variables, such as --width: 40rem.

- CSS allows us to calculate dimensions, such as calc(var(--width) + 2rem).

- CSS allows us to isolate context and prevent naming conflicts within components with the :host and ::slotted selectors.

- CSS allows us to organize rules with @import.

None of this requires a preprocessor. Everything is standards-based and future-proofed.
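Because these variables live in the browser rather than in a build step, a theme can even be adjusted at runtime from one place. The sketch below assumes a hypothetical applyTheme() helper and made-up property names; the only platform call it relies on is style.setProperty().

```javascript
// Sets one CSS custom property per theme entry on the given element.
// Passing document.documentElement makes the values visible to every rule.
function applyTheme(element, theme) {
  for (const [name, value] of Object.entries(theme)) {
    element.style.setProperty(`--${name}`, value);
  }
}

// Only runs in a browser; the property names and values are illustrative.
if (typeof document !== 'undefined') {
  applyTheme(document.documentElement, { width: '40rem', accent: '#0a6' });
}
```

A stylesheet rule such as main { max-width: calc(var(--width) + 2rem); } would then pick up the new width immediately, with no recompilation.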

Today, new projects should carefully evaluate whether Less and Sass are worth the trouble. Eliminating them from our toolchain allows us to declare the exact set of rules we need in their purest form, without any extra tooling.

The Standards Era

In the midst of all this, we began to think of websites differently. Instead of a collection of static web pages providing information, websites were becoming cloud-based applications with access to user-specific data.

Around this time Apple, Mozilla and Opera decided that it was futile to keep innovating in competitive bubbles. Instead they began working cooperatively to develop standards under the new Web Hypertext Application Technology Working Group (WHATWG). Microsoft and Google joined the common effort shortly afterwards. Their work together produced the HTML5 spec.

Up until this time, the development of standards proceeded at a glacial pace. CSS2 took a long time to arrive and provided only a modest advancement to the technology. HTML4 took even longer and arrived with a mish-mash of strict, transitional, and frameset variants.

The browser makers wisely knew that any successful effort to sort through their differences had to keep pace with their collective innovations. Delays in agreeing to a path forward would derail everything.

WHATWG embraced innovation and standards in an entirely new way. They referred to their work as a living standard. This meant that instead of a single monolithic spec that covered everything, there would be separate documents that covered specific domains. So what we casually refer to as the HTML5 standard is in fact a collection of standards. HTML5 & Friends encompassed the core of HTML plus debugging, DOM, fetch, streams, storage, web sockets, web workers, and more.

The living standard approach was a success. So much so that it was then adopted by the World Wide Web Consortium (W3C) and its work on CSS standards. This resulted in numerous CSS3 "modules" including: colors, fonts, selectors, backgrounds and borders, multi-column layout, media queries, paged media, etc. Each of these sets its own pace for moving through the standards process.

Finally, ECMA International adopted the same approach to its work on ECMA-262, the standard behind JavaScript. Its ambitious plans to upgrade the language were reformulated using a "harmony" approach. This meant that new features were to be continuously added over time. So we've enjoyed a steady stream of new language capabilities every year since 2015.

Throughout all of this, instead of lagging behind innovation and merely documenting what was already available, the new approach established the standards-making process itself as the vanguard of innovation. This eliminated much of the chaos that we previously had to endure, and has given us an orderly path forward.

All of this important standards work occurred within the public's view. And as a result, the direction we were headed was well known to all.

At this time, new features for HTML, CSS and JavaScript were being announced every month. And it whetted our appetites. We liked what we were hearing, and we wanted it as soon as possible. In fact we wanted it faster than the browser makers could implement it. And here's where things started to get complicated.

In order to get ahead of the curve, we started using feature detection and polyfills in our codebase. With this technique we could determine which features were supported by the browser and which needed to be mimicked by supplying a faux work-around. This was the so-called progressive enhancement philosophy: adjust the level of sophistication to match the browser's limitations. But this approach was only practical for a limited set of things.
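The detect-then-patch pattern described above can be sketched in a few lines. The polyfill() helper is a hypothetical name, and the Array.prototype.at fallback is a simplified stand-in rather than a spec-complete implementation.

```javascript
// Installs "fallback" as target[name] only when the feature is missing.
// Returns true when the fallback was installed, false when native support exists.
function polyfill(target, name, fallback) {
  if (typeof target[name] === 'function') {
    return false; // feature detected: leave the native implementation alone
  }
  Object.defineProperty(target, name, {
    value: fallback,
    writable: true,
    configurable: true,
    enumerable: false,
  });
  return true;
}

// Example: supply Array.prototype.at where the browser lacks it.
polyfill(Array.prototype, 'at', function at(index) {
  const i = Math.trunc(index) || 0;
  return this[i < 0 ? this.length + i : i];
});
```

Either way, code that calls [1, 2, 3].at(-1) afterwards gets the last element, whether the method came from the browser or from the fallback.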

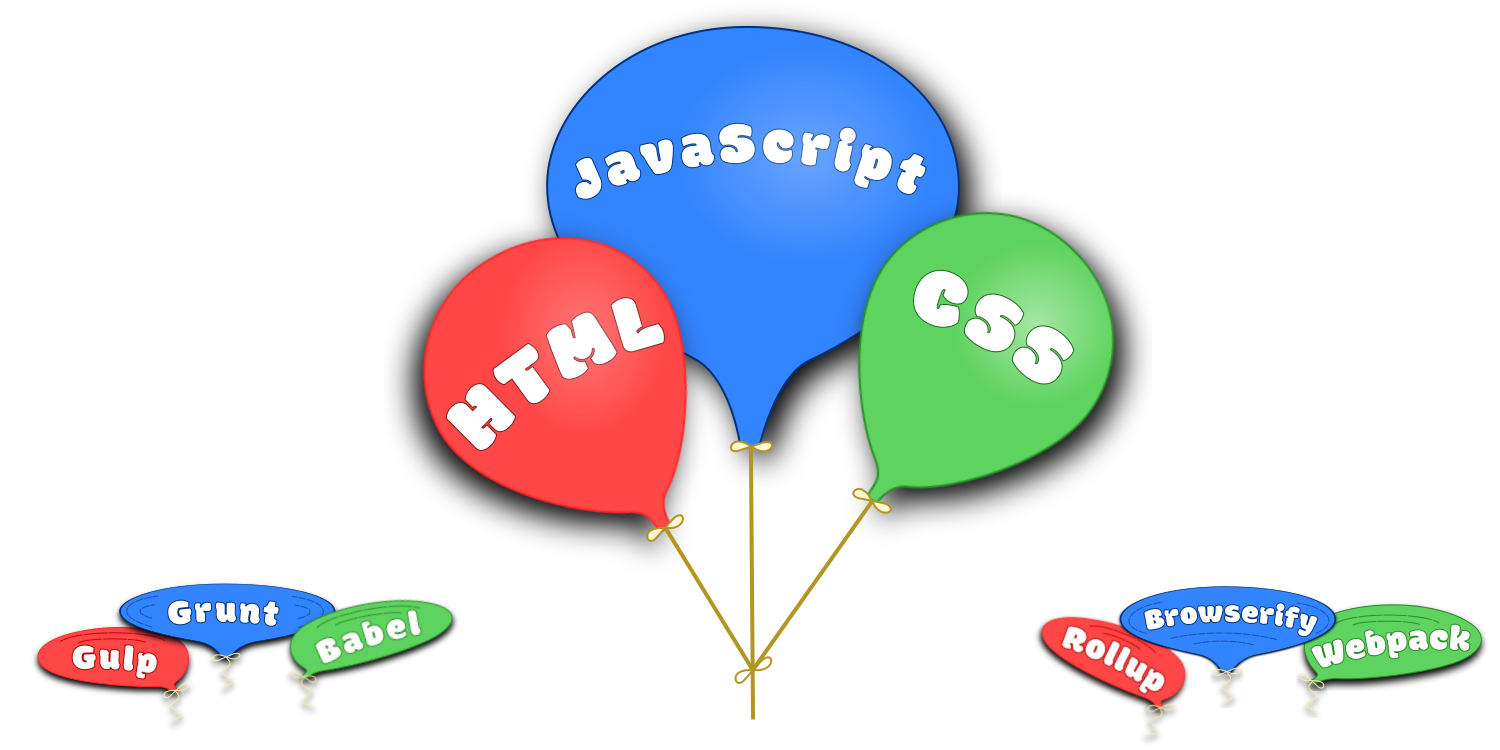

Instead, real world projects began using transpilers, and Babel became our new best friend. It allowed us to write our applications using the convenience of nextgen syntax without worrying about browser versions. So we added transpilers to our build process.

Now, finally, after all these turbulent years, the heyday of new JavaScript features is winding down. The steady pace of new "must-have" capabilities that we've been witness to is nearing its end. We now have a modern multi-paradigm language with modules, classes, iterators, generators, arrow functions, binary data, maps and sets, promises, template literals, destructuring, async/await, rest/spread, and much more.

The biggest productivity gain for many of us was moving beyond deeply nested closures. With native promises and async/await syntax, JavaScript now fully embraces asynchronous programming. Its procedural-style flow is easy on the eyes.
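A small before-and-after shows the difference. The readSetting() function below is a hypothetical stand-in for any callback-style API (it fakes latency with a timer), and the other names are likewise invented for the sketch.

```javascript
// Hypothetical callback-style API: "looks up" a value after a short delay.
function readSetting(key, callback) {
  setTimeout(() => callback(null, `${key}-value`), 10);
}

// Before: every dependent step nests one level deeper.
function loadProfileNested(done) {
  readSetting('user', (err, user) => {
    if (err) return done(err);
    readSetting(user, (err2, theme) => {
      if (err2) return done(err2);
      done(null, { user, theme });
    });
  });
}

// Wrap the callback API once in a promise...
function readSettingAsync(key) {
  return new Promise((resolve, reject) => {
    readSetting(key, (err, value) => (err ? reject(err) : resolve(value)));
  });
}

// ...and the same two steps read from top to bottom.
async function loadProfile() {
  const user = await readSettingAsync('user');
  const theme = await readSettingAsync(user);
  return { user, theme };
}
```

Both versions produce the same result; the second simply lets errors propagate through ordinary try/catch instead of being threaded through every callback by hand.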

Today, the need for transpilers has largely disappeared. Every language feature we need to write clean code is available natively. And we can write using multiple paradigms: functional programming, object-oriented programming, and imperative programming are all possible without ever resorting to transpilers.

(Just one final note about transpilers before moving on. When our preferred language is TypeScript, we can still remove Babel from our pipeline, but we need to keep tsc in the mix in order to convert our source code into browser-compatible JavaScript.)

The Module Era

The final holdout in all of this was modules. As our static websites grew into cloud-based applications, the sheer quantity of functions we created got us into trouble. Simply put, we had a scoping problem. Naming things so that they didn't clash became increasingly difficult.

As we all know, the solution arrived in the form of require and module.exports. Several open source solutions were available, spearheaded by RequireJS and Browserify, which unfortunately were not compatible. And so library developers had to choose which syntax to use for their codebase (AMD or CommonJS), and application developers had to follow their lead, or else untangle the differences using UMD (Universal Module Definition).

When ECMA's Technical Committee (TC39) got involved, it looked like this sorry state of affairs was finally on the mend. TC39 studied the problem in detail and proposed new keywords for the JavaScript language. Their module loader would use newly defined import and export syntax, which was eventually dubbed ESNext.

It turns out though that loading modules is not so easy. Getting dependency management right, dealing with circular dependencies, and handling asynchronous loading were all non-trivial problems. Making it work in Node.js was one thing, but getting it to work in the browser was much more difficult.

Frontend developers could not afford to wait for the browser makers to work through all those problems. We needed modules immediately. Fortunately, a solution was readily available — have developers write code using the new ESNext syntax, and use Babel to transpile it back to CommonJS syntax. This approach worked out well.

Today though, it is no longer necessary. Browser support for the ESNext standard is now available in all modern browsers. For many projects, there is no need for transpilers.

The Bundler Era

For many frontend projects, the toolchain that we built for ourselves has gotten ever more complicated over the years. So to ease our workflow, we employed task runners like Gulp and Grunt, and bundlers like Browserify, Rollup and Webpack. When properly configured, they monitor our files for changes and trigger the necessary transformation process:

- Sass and Less are preprocessed into CSS.

- Modern JavaScript syntax is transpiled down to ES5.

- ESNext modules are repackaged as CommonJS modules.

- Linters are run to check our code for common mistakes.

- Comments and whitespace are removed with minifiers.

- Debug sourcemaps are generated to link mangled code to source code.

- Everything is concatenated into a bundle for efficient HTTP/1.1 transfer.

- Bundles are split into granular bundles for optimal network caching.

This approach has become so integral to our workflow that most of us can't imagine not doing it this way. But examine the list closely and compare it with what we've already learned.

- Preprocessors can be removed when we use the newest CSS features.

- Modern JavaScript is supported by the browser without transpiling.

- ESNext modules can be used natively, without module loaders.

- Standard HTTP caching makes bundle splitting unnecessary. *

- And transferring files over HTTP/2 persistent connections is faster than transferring bundles. *

* (Unfortunately, bundles are still faster than multiple small files when our server uses HTTP/1.1. So to get maximum benefit, switching to a new server with both persistent connections and caching, such as Read Write Tools HTTP/2 Server, is important for the unbundled scenario to be performant.)

The only things remaining from our original list are linters and minifiers. And since those don't need to be invoked every time we test a small change, we can delegate their use to our final deployment scripts. When this happy circumstance arises, we can get rid of the bundler that we've been using.

Voilà! We've streamlined our entire workflow down to just an editor and a browser. Make a change, press the Refresh button, and there it is — your new code in action. Now that's fun!

The Framework Era

This new approach to frontend coding is really just a return to our roots: HTML, CSS, JavaScript and the separation of concerns philosophy. But does it stand up to the rigors of real websites?

For now, the answer depends on your project's architecture.

The current infatuation with frameworks places this new approach out of reach for lots of projects. The recent trend in cloud-based application development has been heavily influenced by a few big-name frameworks. Software packages like Angular, React, Vue, and Svelte have become the de facto starting points for many new frontend projects.

The React framework uses a virtual DOM in an attempt to make programming a declarative exercise. Event handling, attribute manipulation, and DOM interactions are not handled directly by the developer, but by a background transformation routine. To use React declaratively, most developers write statements using the JSX language, an amalgam of JavaScript and XML. Since browsers know nothing about JSX, it has to be transpiled to JavaScript using Babel.

On the other hand, the Angular framework doesn't use anything as far-out as JSX, but it does use templates. These templates are written using HTML plus library-specific attributes and embedded curly-brace expressions. Before being sent to the browser, Angular templates need to be compiled with the ng build command into incremental DOM statements.

The Vue framework also uses templates to write HTML with embedded curly-brace expressions. Again, these need to be compiled into something usable by browsers. Most projects use either vue-template-compiler or its Webpack wrapper vue-loader.

And the Svelte framework uses templates that look remarkably similar to Vue templates. It also shifts the compilation step from runtime to build time. Most projects use either svelte.compile or its Webpack wrapper svelte-loader.

So projects using React, Angular, Vue or Svelte won't be able to streamline their workflow all the way down to the minimalist view outlined above.

The Component Era

On the other hand, the component revolution sparked by WHATWG can make full use of everything covered above. When we develop components using the W3C standards-based approach we gain all of the benefits previously enumerated.

For those interested in this new approach to web development, the key technologies to learn are:

- Custom elements

- Shadow DOM

- HTML templates

All of these are backed by standards. Plus, the good news is that components that use these standards can be written, tested, and used by others without any of the extra cruft that we worked so hard to get rid of. Simple, clean and fun!
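For a taste of what this looks like, here is a minimal sketch of a custom element with its own shadow DOM. The hello-card tag, its name attribute, and the defineHelloCard() wrapper are all invented for illustration; the wrapper takes a window-like object only so the sketch can be exercised outside a browser.

```javascript
// Registers a <hello-card> element on the given window-like object.
function defineHelloCard(win = globalThis) {
  class HelloCard extends win.HTMLElement {
    connectedCallback() {
      // The shadow root keeps this markup and its styles private to the element.
      const shadow = this.attachShadow({ mode: 'open' });
      shadow.innerHTML = '<style>:host { display: block; }</style><p></p>';
      // textContent (not innerHTML) so the attribute value is never parsed as markup.
      const name = this.getAttribute('name') || 'world';
      shadow.querySelector('p').textContent = `Hello, ${name}!`;
    }
  }
  win.customElements.define('hello-card', HelloCard);
  return HelloCard;
}

// In a browser this registers the element straight away; elsewhere it is skipped.
if (typeof customElements !== 'undefined') {
  defineHelloCard();
}
```

Once registered, the page can use <hello-card name="Joe"></hello-card> like any built-in tag, with no compile step between the editor and the browser.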

For proof that this approach works, see how the Blue Fiddle website handles all of this. This is a professional application accessing a backend server API, created with nothing more than an IDE, the BLUEPHRASE template language, and Chrome DevTools.

And while you're there, be sure to inspect under the hood — you'll see clean code that's simple to read and fun to write.